When A/B Testing Your Website Is A Bad Idea?

A/B testing has been a valuable tool for marketers and researchers seeking to improve digital properties for well over a decade. Today, publishers are leveraging new testing technologies to conduct A/B tests on everything from site layouts to brand imagery. I’m willing to bet that more A/B tests are being conducted on websites across the globe now than ever before.

Unfortunately, A/B testing is often treated as the gold standard for decision-making in situations where it is actually a fairly blunt tool. How can data-driven decisions be anything but good, Tyler? That’s a great question. The answer is that you can never go wrong by following the data; however, it’s easy to be misled by what we intuitively think is the right decision.

Below, I’ll highlight some of the common ways that A/B testing is used improperly, and outline a model that can be used more effectively.

When testing works

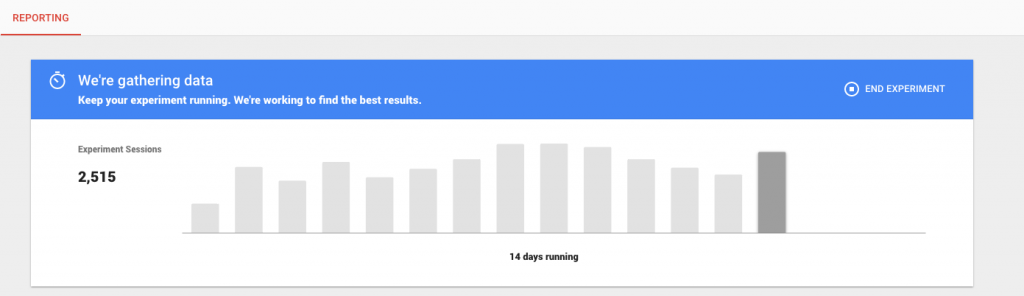

When performed correctly, any test with a sufficient amount of data should allow you to make objective decisions that ultimately move your website towards your end goal, whether that be [improved UX, increased revenue](https://blog.ezoic.com/user-engagement-imapcts-ad-revenue/), more form fills, or something else. The problem that many publishers are discovering about A/B testing in particular is that it is impossible to scale.

In the past 5 years, user behavior has begun an unprecedented shift. Due to increased use of mobile devices and improvements in connectivity, user behavior is no longer as predictable as it once was. While there are many variables to measure, **data scientists have learned that the following visitor conditions have the largest impact on how a user responds to different website elements…**

- internet connection type/speed

- geo-location

- device type

- time of day

- referral source

The flaw in A/B website testing

The application of contextual testing

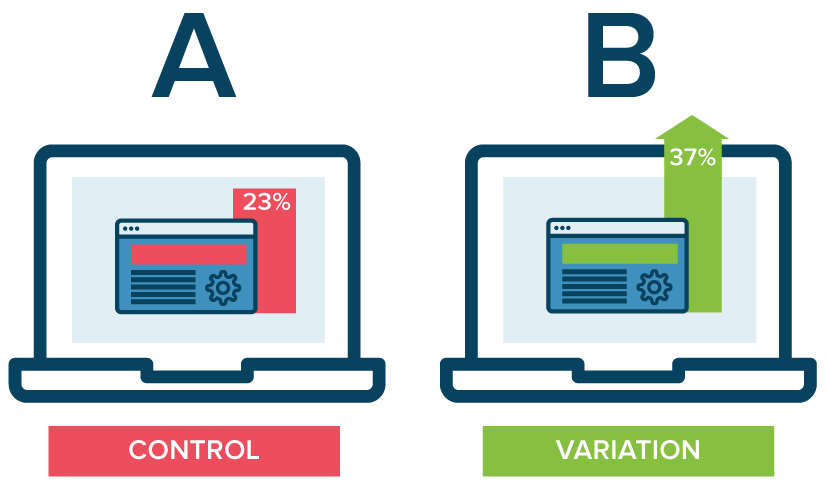

The implementation should apply the same type of methodology. Visitors that prefer A should get A, and visitors that prefer B should get B. There isn’t enough data on individual users to do this on a per-visitor basis; however, you can use the categories below to apply it across segments/buckets.

- geo-location

- UTM source

- time of day

- device type

- avg. user pageload speed
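As a rough illustration of what a segment/bucket could look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `Segment` fields, the `segment_for` helper, and especially the time-of-day and pageload thresholds, which you would want to tune against your own traffic.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Segment:
    """A coarse visitor bucket. All field names are hypothetical."""
    country: str
    utm_source: str
    daypart: str
    device: str
    speed: str


def daypart(hour: int) -> str:
    # Illustrative cutoffs only, not a standard.
    if 6 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    if 18 <= hour < 24:
        return "evening"
    return "night"


def speed_bucket(avg_pageload_ms: float) -> str:
    # Illustrative thresholds only; pick ones that fit your audience.
    if avg_pageload_ms < 1500:
        return "fast"
    if avg_pageload_ms < 4000:
        return "medium"
    return "slow"


def segment_for(country: str, utm_source: Optional[str], visit_time: datetime,
                device: str, avg_pageload_ms: float) -> Segment:
    """Collapse raw visitor attributes into a small, hashable bucket."""
    return Segment(
        country=country.upper(),
        utm_source=(utm_source or "direct").lower(),
        daypart=daypart(visit_time.hour),
        device=device.lower(),
        speed=speed_bucket(avg_pageload_ms),
    )
```

Because `Segment` is frozen, it is hashable and can be used directly as a dictionary key when you tally results per bucket. Keeping the buckets coarse matters: the more finely you slice, the less data each segment has.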

**To do this, a webmaster would need to…**

1. **proxy the _control_ page**

2. **pick page variants that you would like to partition traffic to**

3. **measure the results on a per segment basis**

4. **deliver the winning variants via the proxied page to only the segments in which they were clear winners**
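Steps 3 and 4 above can be sketched in a few lines of Python. This is only one way to do it, under stated assumptions: "clear winner" is interpreted here as a two-proportion z-test on conversion rate, and the function names (`winners_by_segment`, `variant_to_serve`), the `1.96` threshold, and the `min_visits` floor are all hypothetical choices for illustration, not part of any particular testing tool.

```python
import math
from collections import defaultdict


def z_score(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic for variant B versus control A (pooled)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return 0.0 if se == 0 else (p_b - p_a) / se


def winners_by_segment(events, z_threshold=1.96, min_visits=500):
    """Measure results per segment and keep only the clear winners.

    events: iterable of (segment, variant, converted), variant is "A" or "B".
    Returns {segment: "A" or "B"}; segments without a clear winner are omitted.
    """
    # Per segment and variant: [conversions, visits]
    counts = defaultdict(lambda: {"A": [0, 0], "B": [0, 0]})
    for segment, variant, converted in events:
        counts[segment][variant][0] += int(converted)
        counts[segment][variant][1] += 1

    winners = {}
    for segment, c in counts.items():
        (conv_a, n_a), (conv_b, n_b) = c["A"], c["B"]
        if min(n_a, n_b) < min_visits:
            continue  # too little data in this segment to decide anything
        z = z_score(conv_a, n_a, conv_b, n_b)
        if z >= z_threshold:
            winners[segment] = "B"
        elif z <= -z_threshold:
            winners[segment] = "A"
    return winners


def variant_to_serve(segment, winners, control="A"):
    """Serve the winner where one exists; otherwise fall back to the control."""
    return winners.get(segment, control)
```

The segment key can be anything hashable, such as a `("mobile", "US")` tuple. One caveat worth keeping in mind: the more segments you test at once, the more likely it is that one of them looks like a winner by chance, so a stricter threshold than `1.96` may be appropriate when you slice traffic many ways.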

There you have it. That’s a better way to do A/B testing. It’s still not as good as [true-to-form multivariate testing](/?page_id=1598), but it is a much more effective and modern way of approaching website testing.

Website elements worth testing

- menu location

- background color

- text size and color

- other navigational elements

- [ad placements](/?page_id=1148)

- [layouts](/?page_id=1174)

- image locations

These are the elements that we have seen have the largest impact on core user experience metrics over time. Keep in mind that they should be tested across all devices.

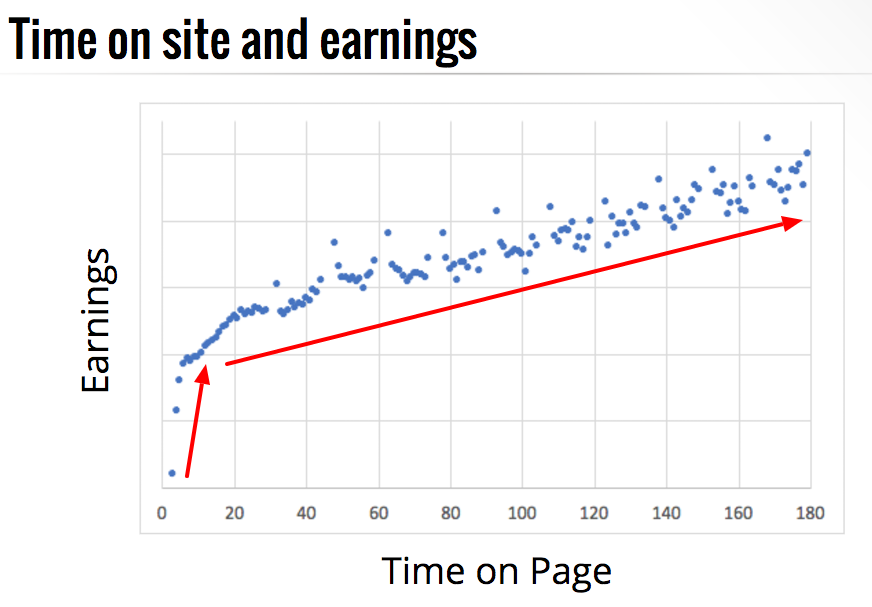

How testing affects revenue

Always follow the data

Ultimately, data and proper testing will always lead us in the right direction. The hard part is conducting sufficient tests and contextualizing the data in a way that keeps us from being misled.

Questions, thoughts, rebuttals? Keep the conversation going below.